SargeUpgradeTalk20060711 (2022-02-21, ChristopherHuhn)

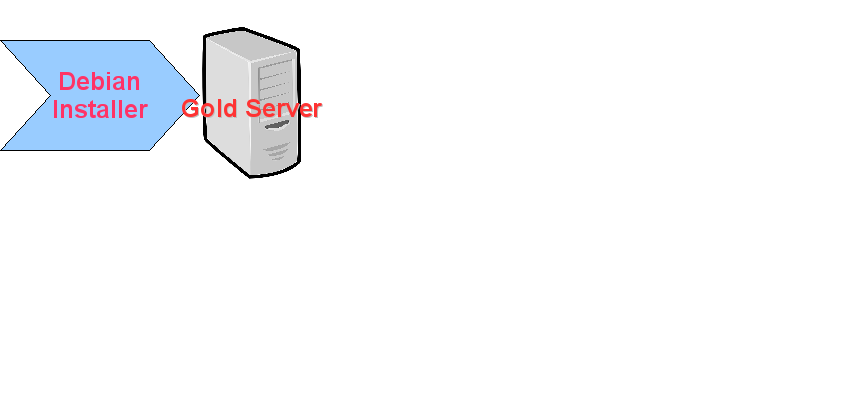

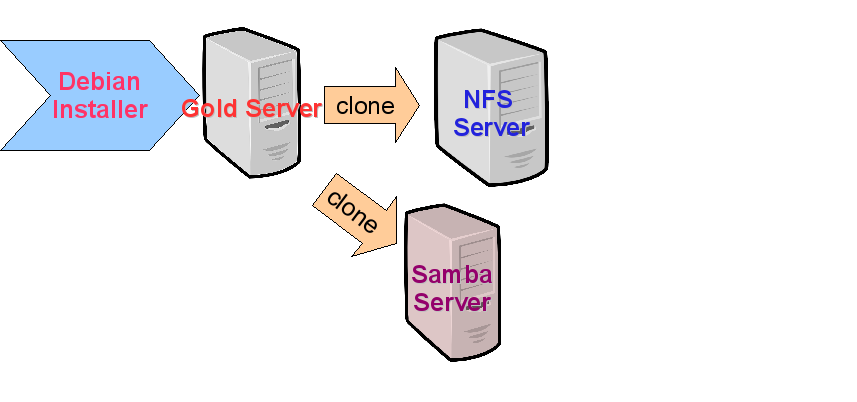

Woody installation

- Woody installation 2002/2003

- Server installation: cloning over NFS

- One size fits all

Woody installation

- Configuration management: shell scripts

- Who dunnit? No revision control, no one to blame

- Centralisation achieved by central NFS mount /usr/local

- Sparse documentation

- Know-how often only implicit

- Administration techniques don't scale well

- particularly in relation to the number of admins

Woody client

- "Groupserver" concept:

- classic NFS root concept

- shared read-only /usr

- Client system (almost) completely on server (except for /var and /tmp)

- Old server hardware

- Hardware maintenance painful

- Important binaries writable (e.g. /sbin/init)

- No automatic upgrade for anything outside /usr

- i.e. no automatic security updates for /bin, /sbin binaries

- No automatic distribution of /var or /etc files


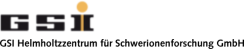

Into the future ...

- http://www.infrastructures.org/ - Site management best practices

- System administrators should not work like craftsmen

- Infrastructure architects

- Configuration as condensed and central as possible

- FAI: Fully Automatic Installation framework

- Plan your installation and FAI installs your plan

- Debian-centric but very flexible

Into the future ...

- Cfengine - configuration management framework

- centralised and pull-based

- description of the intended configuration state

- not a sequential list of actions to be performed

- Use of Debian tools (package management, Debconf) wherever appropriate

- Debian repositories used:

- Debian, Debian-Security, Backports.org, Debian volatile, GSI repository
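The difference between describing an intended state and scripting a sequence of actions can be sketched in a few lines. This is not cfengine syntax; it is a minimal, hypothetical model of convergence, assuming the managed configuration is just a set of files with desired contents and permission bits (`FileSpec`, `converge` and the example paths are illustrative names). Each run compares the actual state with the description and corrects only what differs, so a second run against an already converged tree changes nothing.

```python
import os
import stat
import tempfile
from dataclasses import dataclass


@dataclass(frozen=True)
class FileSpec:
    """Desired state of one managed file (hypothetical model, not cfengine's)."""
    content: str
    mode: int  # permission bits, e.g. 0o644


def converge(root: str, desired: dict[str, FileSpec]) -> list[str]:
    """Move the files under `root` towards the desired state.

    Returns the corrections made. Only differences are acted on,
    so a second run against a converged tree returns [].
    """
    changes: list[str] = []
    for rel_path, spec in sorted(desired.items()):
        path = os.path.join(root, rel_path)
        os.makedirs(os.path.dirname(path), exist_ok=True)

        # Compare the actual content with the desired content.
        current = None
        if os.path.exists(path):
            with open(path, encoding="utf-8") as f:
                current = f.read()
        if current != spec.content:
            with open(path, "w", encoding="utf-8") as f:
                f.write(spec.content)
            changes.append(f"content {rel_path}")

        # Compare the actual permission bits with the desired ones.
        if stat.S_IMODE(os.stat(path).st_mode) != spec.mode:
            os.chmod(path, spec.mode)
            changes.append(f"mode {rel_path}")
    return changes


if __name__ == "__main__":
    # Hypothetical desired state; file names and contents are examples only.
    desired = {
        "etc/motd": FileSpec("This host is managed centrally.\n", 0o644),
        "etc/ntp.conf": FileSpec("server ntp.example.org\n", 0o644),
    }
    with tempfile.TemporaryDirectory() as root:
        print(converge(root, desired))  # first run: lists the corrections made
        print(converge(root, desired))  # second run: [] (nothing left to do)
```

Because the description says *what* should be true rather than *how* to get there, the same run can be repeated safely from a central server at any time, which is what makes a pull-based setup practical.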

Into the future ...

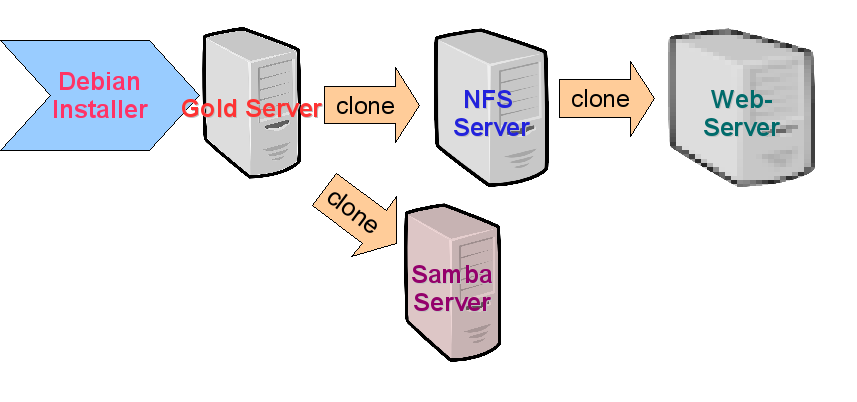

- More services - increased diversification

- Divide installation and configuration into host classes

- Keep It Small and Simple - only install what's required for a service

- Installation and configuration system excellence indicator:

- Reinstallation to service production state should be

- completely unattended and

- faster than a restore from tape backup

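Dividing installation and configuration into host classes, and installing only what a service needs, can be sketched as two lookups: hostname to classes, and classes to package lists. The class names, hostname patterns and package lists below are hypothetical examples, not GSI's actual classes; FAI and cfengine each have their own class mechanisms, which this does not reproduce. A host receives only the union of the packages its classes require.

```python
from fnmatch import fnmatchcase

# Hypothetical class rules: (hostname glob, classes assigned).
# Every host matches "*" and therefore gets the BASE class.
CLASS_RULES = [
    ("*", ["BASE"]),
    ("mail*", ["MAILSERVER"]),
    ("web*", ["WEBSERVER"]),
    ("dev*", ["WEBSERVER", "DEVEL"]),
]

# Hypothetical package lists per class: each class lists only what
# its service needs (Keep It Small and Simple).
PACKAGES = {
    "BASE": ["openssh-server", "cfengine2", "ntp"],
    "MAILSERVER": ["postfix"],
    "WEBSERVER": ["apache2"],
    "DEVEL": ["gcc", "make"],
}


def classes_for(hostname: str) -> list[str]:
    """Classes of a host, in rule order, each listed once."""
    result: list[str] = []
    for pattern, classes in CLASS_RULES:
        if fnmatchcase(hostname, pattern):
            for cls in classes:
                if cls not in result:
                    result.append(cls)
    return result


def packages_for(hostname: str) -> list[str]:
    """Sorted union of the package lists of all classes of a host."""
    wanted: set[str] = set()
    for cls in classes_for(hostname):
        wanted.update(PACKAGES.get(cls, []))
    return sorted(wanted)


if __name__ == "__main__":
    for host in ["mail01", "web02", "dev03"]:
        print(host, classes_for(host), packages_for(host))
```

Keeping the plan as data means a reinstallation needs no operator decisions: the hostname alone determines what gets installed, which is a precondition for the unattended reinstall described above.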

... back to the past?

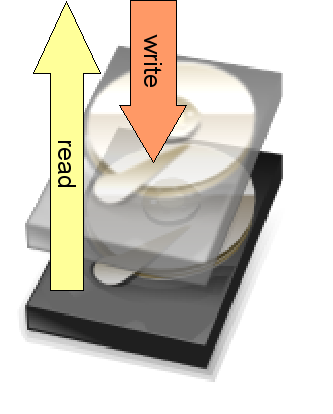

- NFS root reinvented:

- Single system image

- Shared by all clients

- managed with cfengine

- completely distinct from the server system

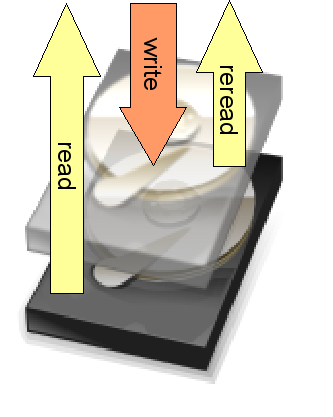

- read-only root filesystem

- unionfs filesystem

- All host-specific files in a central Subversion repository

- Accessed via WebDAV/davfs

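How a read-only shared root, host-specific files and a writable layer can combine into one view is sketched below as an in-memory model of union-mount lookup. The layer order is an assumption for illustration (unionfs itself is configured through mount options, not through an API like this): a writable branch on top, then the host-specific files (which in the setup above come from Subversion via WebDAV/davfs; here they are just a dict), then the shared read-only image. Reads return the topmost branch containing a path, writes always land in the writable branch, and deletions are recorded as whiteouts, so the shared image is never modified.

```python
from typing import Optional


class UnionView:
    """Toy model of a union mount.

    layers[0] is the writable branch; the remaining branches are read-only
    and are searched top to bottom. Lower branches are never modified.
    """

    def __init__(self, writable: dict[str, bytes],
                 *read_only: dict[str, bytes]) -> None:
        self.layers = [writable, *read_only]
        self.whiteouts: set[str] = set()  # paths deleted in the merged view

    def read(self, path: str) -> Optional[bytes]:
        """Content from the topmost branch containing `path`, or None."""
        if path in self.whiteouts:
            return None
        for layer in self.layers:
            if path in layer:
                return layer[path]
        return None

    def write(self, path: str, data: bytes) -> None:
        """Every write lands in the writable branch."""
        self.whiteouts.discard(path)
        self.layers[0][path] = data

    def append(self, path: str, data: bytes) -> None:
        """Copy-up: read the current content from any branch, write it on top."""
        self.write(path, (self.read(path) or b"") + data)

    def delete(self, path: str) -> None:
        """Hide `path` with a whiteout instead of touching read-only branches."""
        self.layers[0].pop(path, None)
        self.whiteouts.add(path)


if __name__ == "__main__":
    image = {"/etc/hostname": b"generic\n", "/etc/motd": b"Welcome\n"}
    host_files = {"/etc/hostname": b"host042\n"}  # hypothetical host-specific file
    scratch: dict[str, bytes] = {}  # writable branch, e.g. a tmpfs
    view = UnionView(scratch, host_files, image)
    print(view.read("/etc/hostname"))  # the host-specific copy wins over the image
    view.append("/etc/motd", b"Managed by cfengine\n")
    print(image["/etc/motd"], scratch["/etc/motd"])  # the image stays unchanged
```

Since nothing ever writes to the shared image, it can be updated on the server while clients are down, and a misbehaving client can damage at most its own writable branch.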

Pros

- Improved security

- Difficult (impossible?) to hack

- Updates even when the box is down

- ready for dual boot / multi boot

- Improved reliability?

- Improved performance (new hardware)

- Instant installation (~ 2 minutes)

- Can be performed by the operators

- Ease of administration

- Condensed and centralised

... and Cons

- Completely reliant on the network

- Inferior performance compared to local installation?

- Installation time irrelevant if the response time to installation requests is on the order of days?

On and on and on and on ...

- Create NFS root image with FAI

- Move to 64bit

- Upgrade to Etch

- Ubuntu?

- GSI Knoppix CD?

- failover NFS-Cluster

- load-balancing?

- Build a complete test environment


Copyright © by the contributing authors. All material on this collaboration platform is the property of the contributing authors.
